Von Neumann probes are going to turn the universe into grey goo. Or at least that’s what I’m led to believe by most of the fictional representations of their existence. The negative consensus is that these self-replicating exploration probes will go mindlessly off-mission and keep going until they’ve turned every useful atom in the universe into one of their offspring.

It would be irresponsible for us or any other sentient biological race to create them. No, we biological folk would treat the universe much more ethically.

Ummm… why is that?

Humans have committed most of the supposed Von Neumann sins here on Earth over our long climb up to dominance. Why is it that we trust a human colonization wave of self-replicating people more than the machines?

Is it merely that we would replicate more slowly? That doesn’t make much difference over astronomical time scales.

Is it that being biological makes us less prone to mistakes or bad ideas? Looking at some of the shit from our history does not bolster any sense of human infallibility.

Or is it simply that we humans have reached the point where we realize that unleashing a wave of unthinking resource eaters upon the universe would be a bad idea? Yes, we are wise, and so we know far better than any machine ever could.

Bullshit.

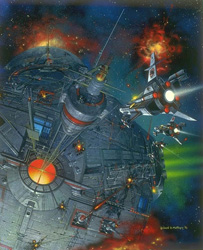

I think the big disconnect for me is that we imagine we can create a self-replicating spacecraft capable of travelling to a new star system, exploring it, mining it for resources, building and launching child craft to carry on, and performing its actual mission in that system, yet somehow lacking the intelligence and stability to stay on mission and not go all wonky and consume the universe.

Smart folks have suggested various ways to limit them, such as capping the number of offspring each can produce or imposing other hard-rule limits, but I feel they’re missing the more obvious solution: just make them as smart as we are and see to it that they have high ethical standards.

That may seem like I’m asking a lot, but given that we’re nowhere close to being able to launch interstellar probes at even one percent of the speed of light or build self-replicating machines, I think that the AI folks have some time on their hands. Even pushing the supposedly impending singularity back a couple of hundred years, I suspect our computing resources will get there before our spacecraft capabilities are ready.

Heck, if the “upload your brain” folks ever get their wish, it might effectively be us humans popping out into the cosmos. Send a lot of us, as many as you can cram into the silicon. Fifty or a hundred human minds aboard each probe ought to be able to make sound decisions about not smelting the entire universe. Plus, they’d be able to keep one another company.

I’d still worry a bit about one group going a little fanatical, so I’d ensure some cross-fertilization. Plot out the exploration so that two or more child ships are sent to the same location but from different parent ships. Upon arriving together, the outgoing child ships would get a mixed crew of the minds from different parent crews. Then don’t leave operational machinery behind. Do your thing and move on. The vast majority of resources will remain untouched.

It doesn’t seem that hard to me to plan something that won’t go catastrophically off-mission. Just give them the good sense that we seem to have.

Or is that the good sense we hope we have?